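I. Environment

1. Three servers: one runs the Apache HTTP Server and two run Tomcat.

2. Components used and where to download them:

Apache HTTP Server: apache_2.2.11-win32-x86-no_ssl.msi

http://httpd.apache.org/download.cgi

Tomcat 6 (zip distribution)

http://tomcat.apache.org/download-60.cgi

Apache JK connector (Windows build), loaded as an Apache module; the download site also documents how to use its configuration files: mod_jk-1.2.28-httpd-2.2.3.so

http://www.apache.org/dist/tomcat/tomcat-connectors/jk/binaries/win32/jk-1.2.28/

3. IP configuration

The Apache machine has the IP 192.168.1.50; the two Tomcat machines have the IPs 192.168.1.24 and 192.168.1.52 (assign addresses to suit your own network).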

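II. Installation

1. Install JDK 6 (JDK 5 at minimum) on both Tomcat machines.

2. Configure the JDK path: add the JDK's bin directory to the PATH environment variable, and create a JAVA_HOME environment variable that points to the JDK installation directory.

3. Install Tomcat and check that each instance starts correctly:

http://192.168.1.24:8080 http://192.168.1.52:8080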
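The start-up check in step 3 can also be scripted. The following is a minimal sketch, assuming the two Tomcat hosts from this guide and Tomcat's default HTTP port 8080; the function names are illustrative and not part of any Tomcat tooling.

```python
import urllib.error
import urllib.request

# Hosts taken from this guide; adjust them to your own network.
TOMCAT_HOSTS = ["192.168.1.24", "192.168.1.52"]


def tomcat_urls(hosts, port=8080):
    """Build the default HTTP URL of each Tomcat instance."""
    return [f"http://{host}:{port}/" for host in hosts]


def is_up(url, timeout=3.0):
    """Return True if the URL answers with an HTTP status below 500."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status < 500
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status (e.g. 404).
        return err.code < 500
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, unknown host, ...
        return False


def report(hosts):
    """Map each Tomcat URL to True/False depending on reachability."""
    return {url: is_up(url) for url in tomcat_urls(hosts)}
```

Calling `report(TOMCAT_HOSTS)` from a machine on the same network returns one entry per instance; a `False` value means that Tomcat did not answer on port 8080.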

III. Load-balancing configuration

1. Install Apache on the 192.168.1.50 machine. I used the default installation path: D:/Program Files/Apache Software Foundation/Apache2.2

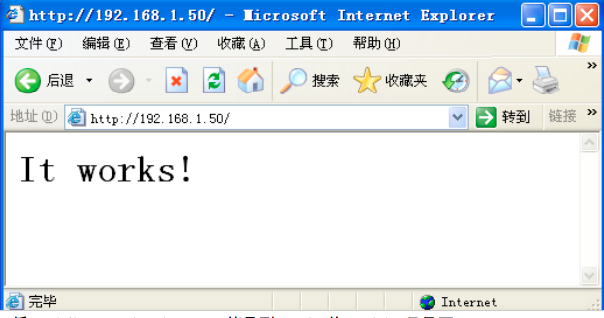

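2. After installation, check that Apache starts correctly by opening http://192.168.1.50, as shown in the figure.

3. Copy mod_jk-1.2.28-httpd-2.2.3.so into Apache's modules directory.

4. Edit Apache's configuration file httpd.conf and append the following at the end: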

# Load the JK connector

LoadModule jk_module modules/mod_jk-1.2.28-httpd-2.2.3.so

# Load-balancing configuration file, which defines the balancing rules

JkWorkersFile conf/workers.properties

# Log file

JkLogFile logs/mod_jk.log

# Log level

JkLogLevel debug

# Requests that Apache forwards to JK for balancing

JkMount /*.jsp loadbalancer

JkMount /test/* loadbalancer
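To get a feel for which request paths the two JkMount lines hand over to Tomcat, the patterns can be tried out offline. The sketch below only approximates the matching with Python's shell-style `fnmatchcase`; it is not mod_jk's own matcher and ignores the module's precedence rules, so treat the result as a rough guide.

```python
from fnmatch import fnmatchcase

# Patterns from the JkMount lines above, each mapped to its worker.
JK_MOUNTS = [("/*.jsp", "loadbalancer"), ("/test/*", "loadbalancer")]


def worker_for(path, mounts=JK_MOUNTS):
    """Return the worker of the first matching pattern.

    None means no pattern matched, so Apache would serve the path itself.
    """
    for pattern, worker in mounts:
        if fnmatchcase(path, pattern):
            return worker
    return None
```

With these patterns, `/test/test.jsp` and any other path under `/test/` go to `loadbalancer`, while a path such as `/index.html` stays with Apache.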

5. Edit the configuration file conf/server.xml on each of the two Tomcat instances.

Before:

<!-- An Engine represents the entry point (within Catalina) that processes

every request. The Engine implementation for Tomcat stand alone

analyzes the HTTP headers included with the request, and passes them

on to the appropriate Host (virtual host).

Documentation at /docs/config/engine.html -->

<!-- You should set jvmRoute to support load-balancing via AJP ie :

<Engine name="Catalina" defaultHost="localhost" jvmRoute="jvm1">

-->

<Engine name="Catalina" defaultHost="localhost">

After:

<!-- An Engine represents the entry point (within Catalina) that processes

every request. The Engine implementation for Tomcat stand alone

analyzes the HTTP headers included with the request, and passes them

on to the appropriate Host (virtual host).

Documentation at /docs/config/engine.html -->

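<!-- You should set jvmRoute to support load-balancing via AJP ie : -->

<Engine name="Catalina" defaultHost="localhost" jvmRoute="node1">

Add the jvmRoute attribute to the Engine element (the commented-out sample uses jvmRoute="jvm1"), setting it to jvmRoute="node1" on one Tomcat and jvmRoute="node2" on the other. Only one Engine element may remain active, so the original line without jvmRoute is replaced rather than kept next to the new one.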
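Because each node needs its own jvmRoute, it can help to read the value back from every server.xml after editing. This is a small sketch using Python's standard XML parser; the helper names are made up for illustration, and the file content is passed in as text.

```python
import xml.etree.ElementTree as ET


def jvm_route(server_xml_text):
    """Return the jvmRoute of the first <Engine> element, or None if unset."""
    root = ET.fromstring(server_xml_text)
    engine = root.find(".//Engine")
    return engine.get("jvmRoute") if engine is not None else None


def duplicate_routes(routes):
    """Return the jvmRoute values that more than one node uses."""
    seen, dupes = set(), set()
    for route in routes:
        if route is None:
            continue  # unset routes are reported by jvm_route(), not here
        if route in seen:
            dupes.add(route)
        seen.add(route)
    return sorted(dupes)
```

Reading both files and passing the two results to `duplicate_routes` should give an empty list; a non-empty list means both Tomcats were given the same name.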

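6. In Apache's conf directory, create the file workers.properties with the following content:

# Define the communication channel

# List every worker name; node1 and node2 correspond to the jvmRoute attribute in the Tomcat configuration files above

# loadbalancer is a virtual worker that performs the load balancing; think of it as Apache itself

worker.list=node1,node2,loadbalancer

# node1 talks to JK over AJP

# AJP port; it must match the AJP connector port in this Tomcat's server.xml (Tomcat's default is 8009)

worker.node1.port=8009

# Address of the server that runs node1

worker.node1.host=192.168.1.24

# Worker type

worker.node1.type=ajp13

# Load-balancing factor

worker.node1.lbfactor=100

# AJP port of node2; it must match the AJP connector port in that Tomcat's server.xml (use 8009 if the default was not changed)

worker.node2.port=9009

# Address of the server that runs node2

worker.node2.host=192.168.1.52

# Worker type

worker.node2.type=ajp13

# Load-balancing factor; the larger the value, the larger the share of requests JK sends to this Tomcat

worker.node2.lbfactor=100

# Define loadbalancer as a worker of type "load balancer" (lb)

worker.loadbalancer.type=lb

# Workers that loadbalancer distributes requests to

worker.loadbalancer.balanced_workers=node1,node2

worker.loadbalancer.sticky_session=false

worker.loadbalancer.sticky_session_force=false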
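A typo in a worker name is easy to miss in this file, so a quick consistency check can be useful before restarting Apache. The sketch below is a simplified reader for `key=value` lines, not mod_jk's parser; it only reports balanced workers whose host, port, or type is not set explicitly.

```python
def parse_workers(text):
    """Parse 'key=value' lines, ignoring blank lines and '#' comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if "=" in line:
            key, value = line.split("=", 1)
            props[key.strip()] = value.strip()
    return props


def check_balancer(props, balancer="loadbalancer"):
    """Return a list of findings about the load balancer definition."""
    findings = []
    if props.get(f"worker.{balancer}.type") != "lb":
        findings.append(f"{balancer} is not of type lb")
    members = props.get(f"worker.{balancer}.balanced_workers", "")
    for name in filter(None, (m.strip() for m in members.split(","))):
        for field in ("host", "port", "type"):
            if f"worker.{name}.{field}" not in props:
                findings.append(f"worker.{name}.{field} is not set explicitly")
    return findings
```

An empty list from `check_balancer(parse_workers(text))` means every worker named in `balanced_workers` has the three settings used in this guide.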

7. Create the same application under webapps in both Tomcat installations. Mine is named test. In both application directories create the same WEB-INF directory and a page test.jsp with the following content:

<%@ page language="java" contentType="text/html; charset=ISO-8859-1" pageEncoding="GBK" %>

<!DOCTYPE html PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" "http://www.w3.org/TR/html4/loose.dtd">

<html>

<head>

<meta http-equiv="Content-Type" content="text/html; charset=ISO-8859-1">

<title>helloapp</title>

</head>

<body>

<%

System.out.println("call test.jsp"); // print a trace line on the Tomcat console

%>

SessionID: <%= session.getId() %>

</body>

</html>

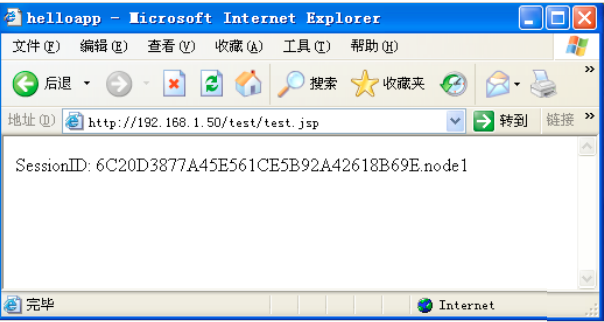

8. Restart the Apache server and both Tomcat servers. Load balancing is now configured. To test it, open:

http://192.168.1.50/test/test.jsp

If the page loads normally, load balancing is working.
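A page that loads does not yet show that both nodes take requests. Since Tomcat appends the jvmRoute to the session ID (for example `….node1`), the node that served a request can be read from the page. The sketch below assumes the test.jsp output format from step 7 and sends requests without cookies, so each one starts a new session; the function names are illustrative.

```python
import re
import urllib.request
from collections import Counter

# Matches the "SessionID: <id>" line printed by test.jsp.
SESSION_RE = re.compile(r"SessionID:\s*([\w.\-]+)")


def route_of(page_text):
    """Extract the jvmRoute suffix (text after the last '.') from the page."""
    match = SESSION_RE.search(page_text)
    if not match or "." not in match.group(1):
        return None
    return match.group(1).rsplit(".", 1)[1]


def sample_routes(url, requests=10):
    """Fetch the page several times without cookies and count the routes."""
    counts = Counter()
    for _ in range(requests):
        with urllib.request.urlopen(url, timeout=5) as resp:
            counts[route_of(resp.read().decode("latin-1"))] += 1
    return counts
```

Running `sample_routes("http://192.168.1.50/test/test.jsp")` should show both `node1` and `node2` in the counts when the two workers have equal lbfactor values.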

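IV. Cluster configuration

1. The Tomcat cluster is configured on top of the load-balancing setup above.

2. Edit conf/server.xml on each of the two Tomcat instances as follows.

Before: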

<!--

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"/>

-->

After:

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster" channelSendOptions="8">

<Manager className="org.apache.catalina.ha.session.DeltaManager" expireSessionsOnShutdown="false" notifyListenersOnReplication="true"/>

<Channel className="org.apache.catalina.tribes.group.GroupChannel">

<Membership className="org.apache.catalina.tribes.membership.McastService" bind="192.168.1.100" address="228.0.0.4" port="45564" frequency="500" dropTime="3000"/>

<Receiver className="org.apache.catalina.tribes.transport.nio.NioReceiver" address="auto" port="4000" autoBind="100" selectorTimeout="5000" maxThreads="6"/>

<Sender className="org.apache.catalina.tribes.transport.ReplicationTransmitter">

<Transport className="org.apache.catalina.tribes.transport.nio.PooledParallelSender"/>

</Sender>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.TcpFailureDetector"/>

<Interceptor className="org.apache.catalina.tribes.group.interceptors.MessageDispatch15Interceptor"/>

</Channel>

<Valve className="org.apache.catalina.ha.tcp.ReplicationValve" filter=""/>

<Valve className="org.apache.catalina.ha.session.JvmRouteBinderValve"/>

<Deployer className="org.apache.catalina.ha.deploy.FarmWarDeployer" tempDir="/tmp/war-temp/" deployDir="/tmp/war-deploy/" watchDir="/tmp/war-listen/" watchEnabled="false"/>

<ClusterListener className="org.apache.catalina.ha.session.JvmRouteSessionIDBinderListener"/>

<ClusterListener className="org.apache.catalina.ha.session.ClusterSessionListener"/>

</Cluster>

Note: bind is the IP address of the machine the Tomcat server runs on; replace the 192.168.1.100 shown above with that machine's own address (192.168.1.24 or 192.168.1.52 in this setup).

3. Restart both Tomcat instances. The Tomcat cluster configuration is now complete.
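Cluster membership relies on multicast packets sent to the address and port of the Membership element, so a network that blocks multicast stops the nodes from finding each other. The following sketch listens on that group for a few seconds and counts datagrams per sender. It assumes the values 228.0.0.4 and 45564 from the configuration above, and that it runs on a machine in the same subnet as the Tomcat nodes; it does not decode the packets.

```python
import socket
import struct
import time

GROUP, PORT = "228.0.0.4", 45564  # values from the <Membership> element


def membership_request(group, interface="0.0.0.0"):
    """Build the 8-byte ip_mreq structure used to join a multicast group."""
    return struct.pack("4s4s", socket.inet_aton(group), socket.inet_aton(interface))


def count_heartbeats(group=GROUP, port=PORT, seconds=5.0):
    """Count multicast datagrams per sender address seen within `seconds`."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    senders = {}
    try:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        sock.bind(("", port))
        sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP,
                        membership_request(group))
        deadline = time.monotonic() + seconds
        while True:
            remaining = deadline - time.monotonic()
            if remaining <= 0:
                break
            sock.settimeout(remaining)
            try:
                _, (addr, _port) = sock.recvfrom(4096)
            except socket.timeout:
                break
            senders[addr] = senders.get(addr, 0) + 1
    finally:
        sock.close()
    return senders
```

If `count_heartbeats()` returns entries for both 192.168.1.24 and 192.168.1.52, multicast traffic from both nodes is reaching the listening machine.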

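V. Application configuration

Every application under Tomcat's webapps directory that should take part in load balancing and clustering needs the following element added to its WEB-INF/web.xml:

<distributable/>

After the change: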

<?xml version="1.0" encoding="UTF-8"?>

<web-app id="WebApp_ID" version="2.4" xmlns="http://java.sun.com/xml/ns/j2ee" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://java.sun.com/xml/ns/j2ee http://java.sun.com/xml/ns/j2ee/web-app_2_4.xsd">

<display-name>test</display-name>

<distributable/>

<welcome-file-list>

<welcome-file>index.html</welcome-file>

<welcome-file>index.htm</welcome-file>

<welcome-file>index.jsp</welcome-file>

<welcome-file>default.html</welcome-file>

<welcome-file>default.htm</welcome-file>

<welcome-file>default.jsp</welcome-file>

</welcome-file-list>

</web-app>
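When several applications are deployed, it is easy to forget the element in one of them. As a small sketch, the check below parses a web.xml given as text and looks for a `distributable` child of the root element; the function name is hypothetical.

```python
import xml.etree.ElementTree as ET


def is_distributable(web_xml_text):
    """Return True if the root <web-app> has a direct <distributable/> child."""
    root = ET.fromstring(web_xml_text)
    # ElementTree prefixes tag names with '{namespace}'; strip it before comparing.
    return any(child.tag.rsplit("}", 1)[-1] == "distributable" for child in root)
```

Stripping the namespace prefix keeps the check working for web.xml files that declare the j2ee namespace as well as for those that declare none.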

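VI. Testing the cluster

Restart the Apache server and both Tomcat servers, then open:

http://192.168.1.50/test/test.jsp

The result looks like this:

If the session ID stays the same across requests from the same browser, the cluster is configured correctly.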
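The browser test can be repeated from a script that keeps cookies between requests, as a single browser would. This is a minimal sketch assuming the test.jsp output format used earlier. Because the JvmRouteBinderValve may rewrite the `.node1` / `.node2` suffix when a request lands on the other node, the comparison uses only the part of the ID before the first dot.

```python
import http.cookiejar
import re
import urllib.request

# Matches the "SessionID: <id>" line printed by test.jsp.
SESSION_RE = re.compile(r"SessionID:\s*([\w.\-]+)")


def base_id(session_id):
    """Drop the '.jvmRoute' suffix so IDs from different nodes compare equal."""
    return session_id.split(".", 1)[0]


def session_is_stable(session_ids):
    """True if at least one ID was seen and all IDs share one base ID."""
    return len({base_id(s) for s in session_ids}) == 1


def collect_session_ids(url, requests=6):
    """Request the page repeatedly with one cookie jar, like a single browser."""
    jar = http.cookiejar.CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    ids = []
    for _ in range(requests):
        with opener.open(url, timeout=5) as resp:
            match = SESSION_RE.search(resp.read().decode("latin-1"))
        if match:
            ids.append(match.group(1))
    return ids
```

Passing the result of `collect_session_ids("http://192.168.1.50/test/test.jsp")` to `session_is_stable` gives `True` when the session is replicated, and `False` when each node hands out its own session.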